FedAvg: The Seminal Work of Federated Learning

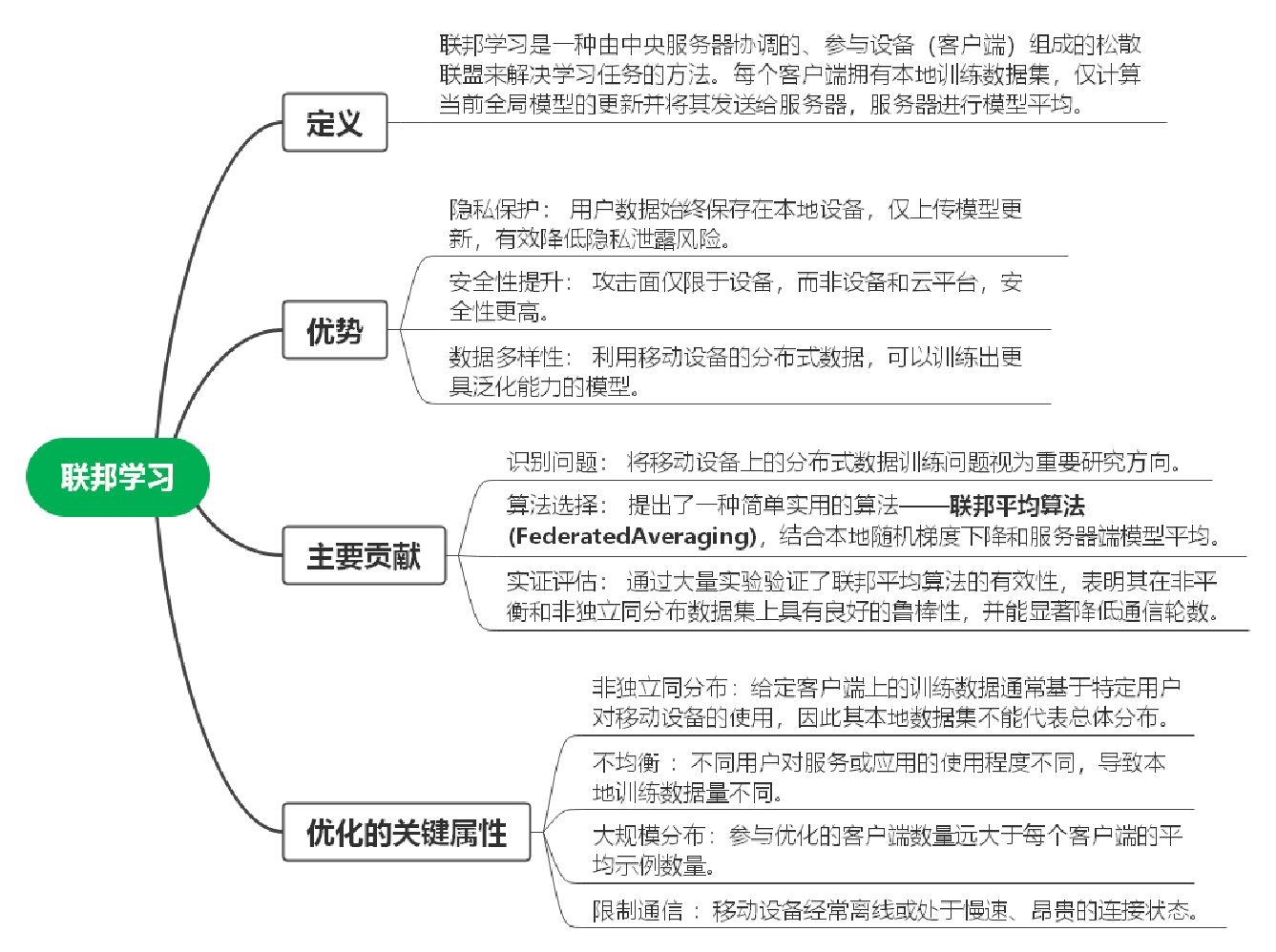

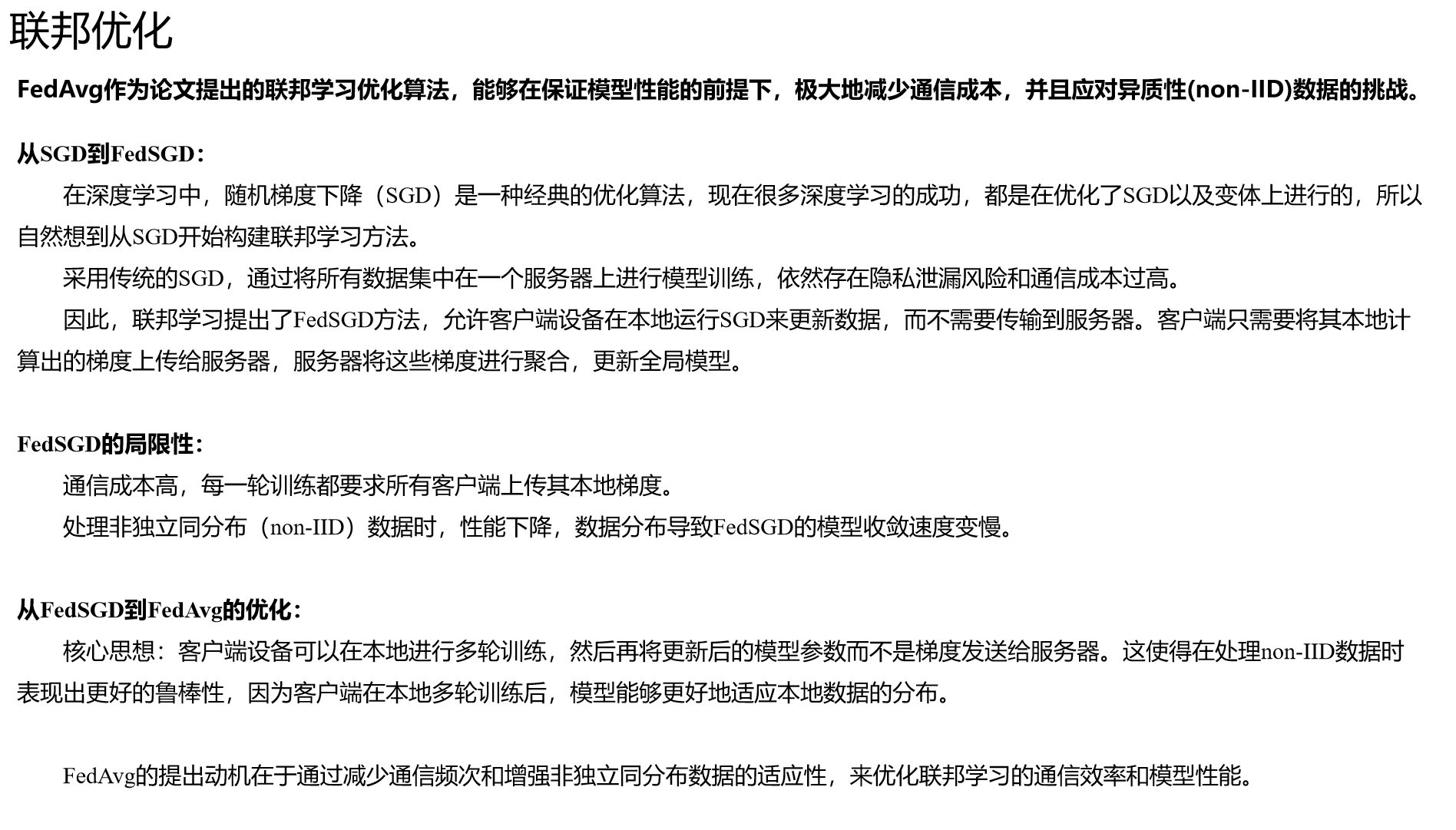

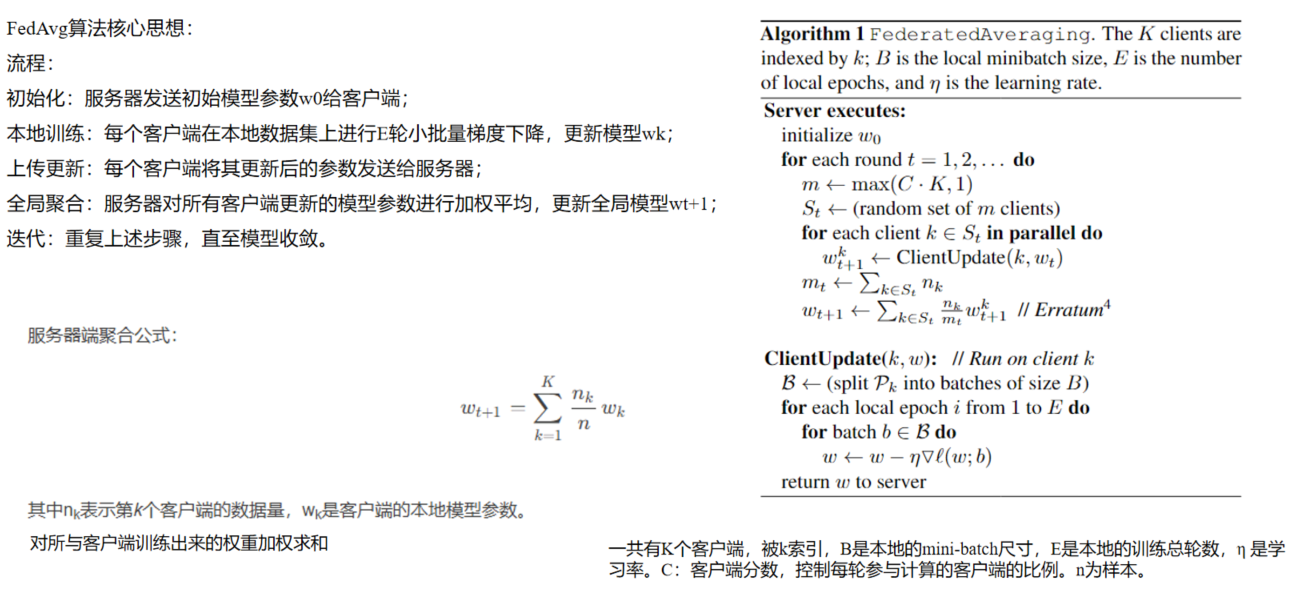

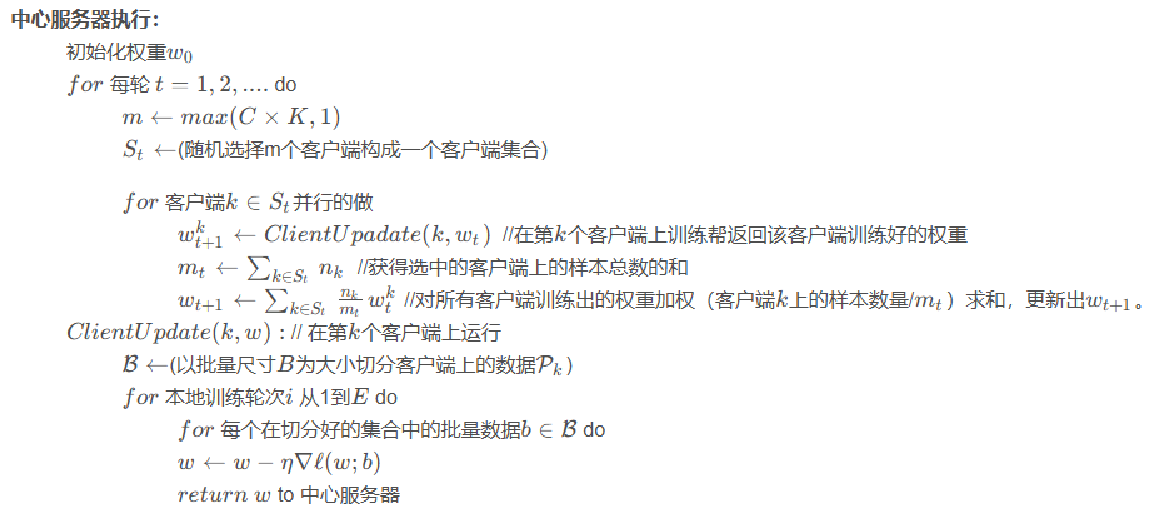

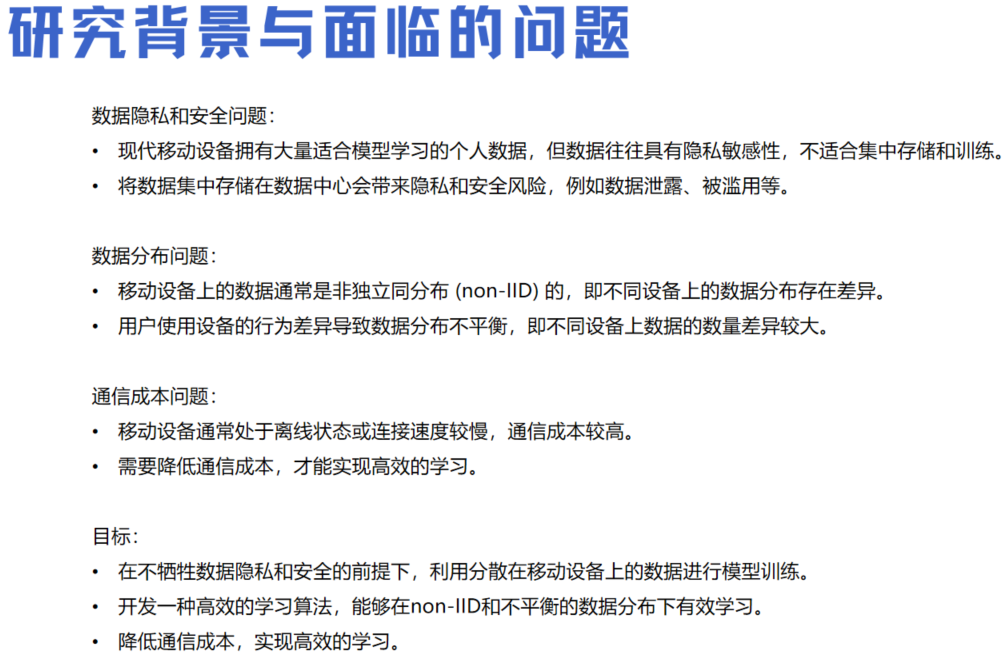

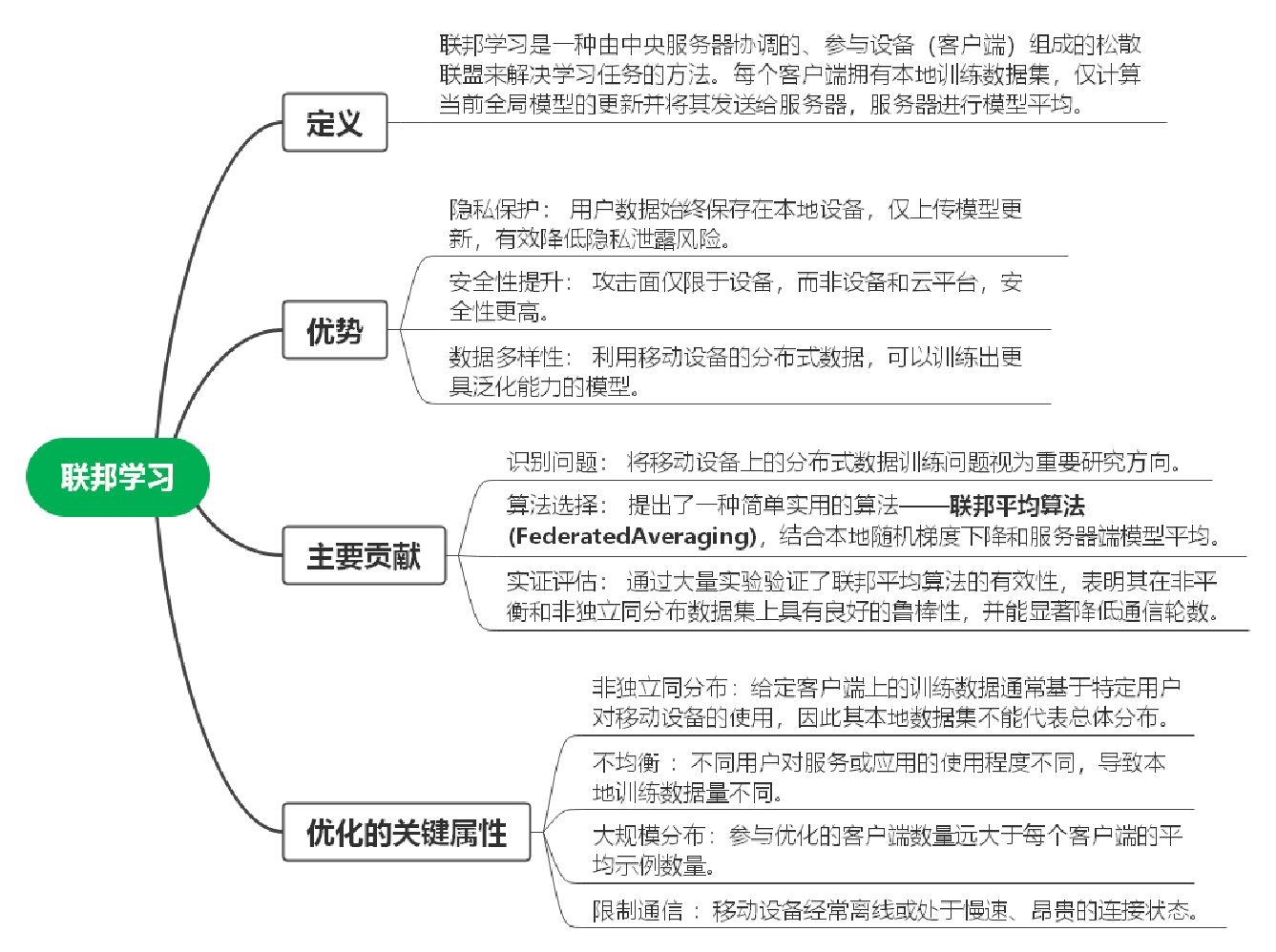

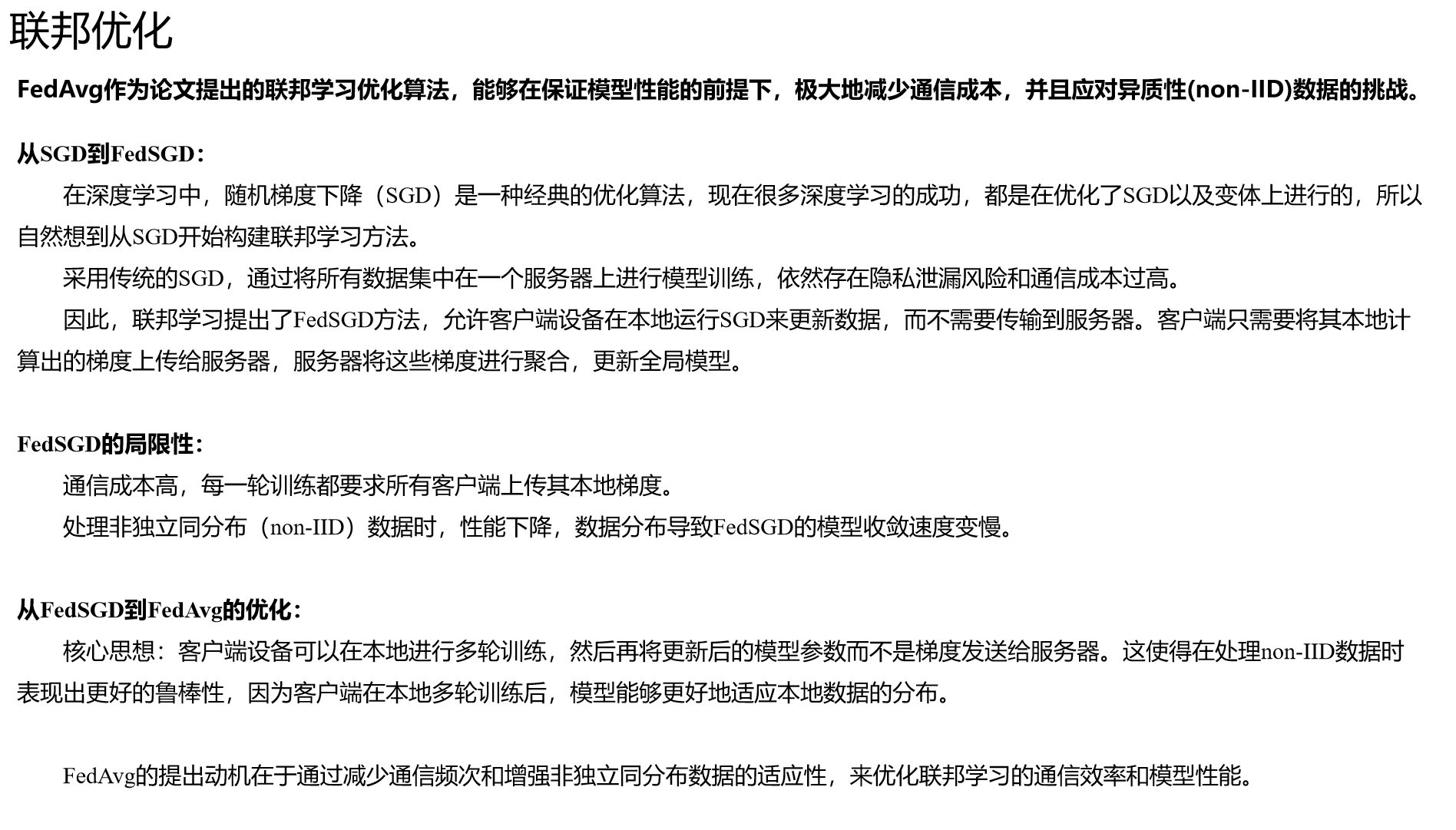

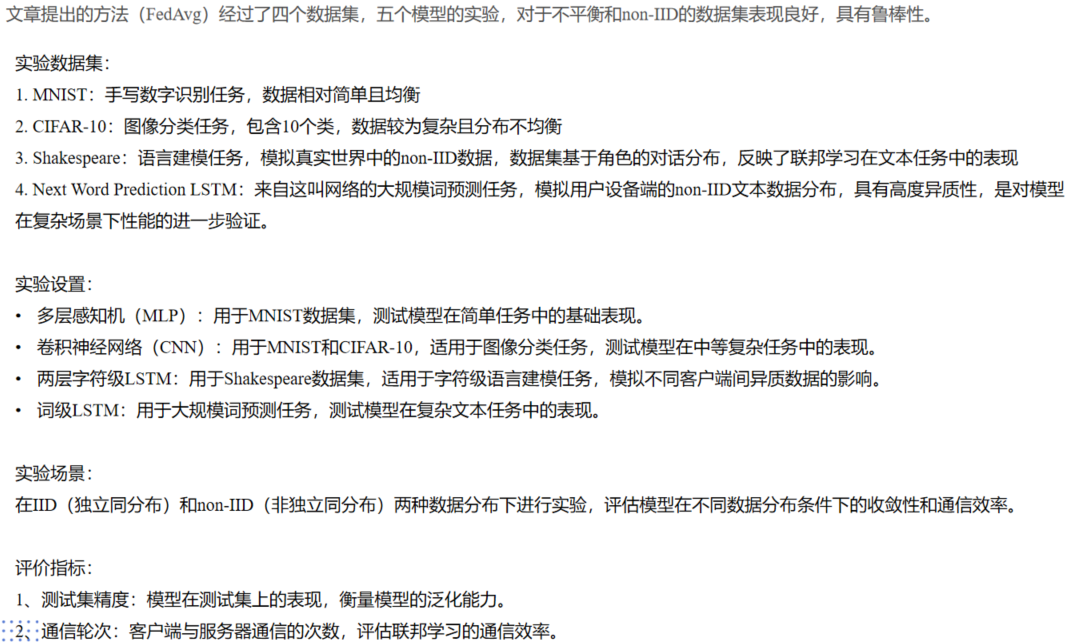

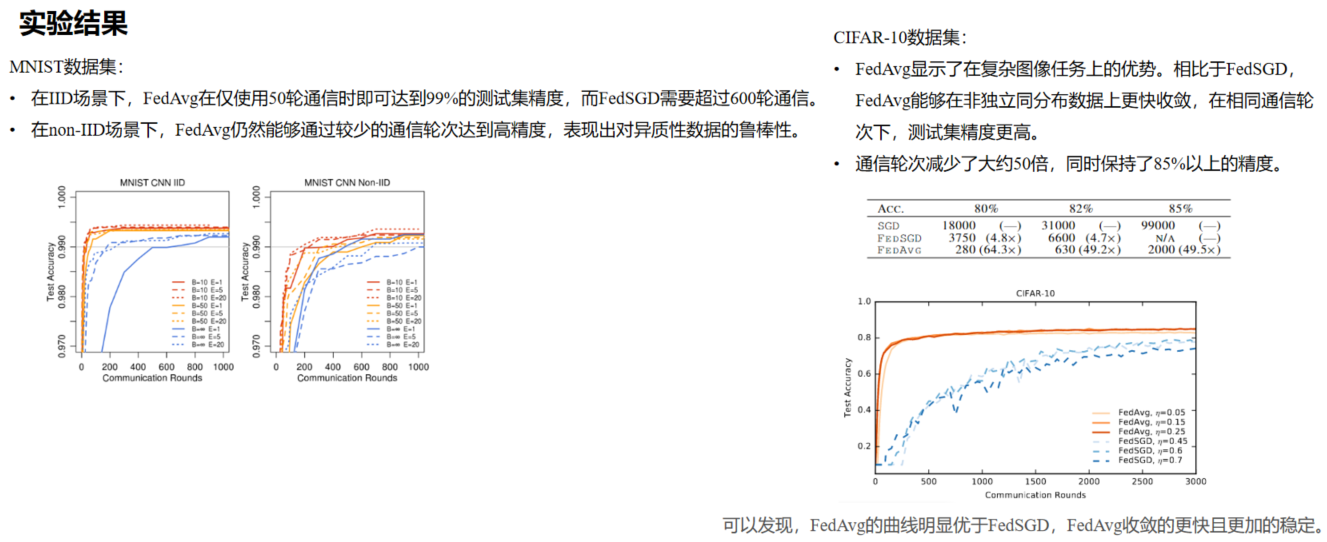

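The paper therefore proposes a new approach called Federated Learning: the data stays on the local devices, where the model is trained, instead of being transmitted. Only the model updates are sent to a central server for aggregation. This reduces the risk of privacy leakage and, compared with uploading the raw data, significantly lowers communication overhead.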
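The train-locally-then-aggregate loop described above can be illustrated with a minimal sketch of federated averaging. It is not the paper's implementation. It assumes a toy one-dimensional linear model `y = w * x + b` and synthetic, noise-free data, and the names `make_clients`, `local_update`, `federated_average`, and `run_fedavg`, along with the learning rate, epoch count, and client sizes, are hypothetical choices made for illustration. Only the standard library is used.

```python
import random


def make_clients(sizes=(10, 20, 30), seed=0):
    """Hypothetical helper: build synthetic private datasets for y = 3x + 1."""
    rng = random.Random(seed)
    clients = []
    for n in sizes:
        xs = [rng.random() for _ in range(n)]
        clients.append([(x, 3.0 * x + 1.0) for x in xs])
    return clients


def local_update(weights, data, lr=0.3, epochs=5):
    """Run a few epochs of full-batch gradient descent on one client's data.

    The raw (x, y) pairs never leave this function; only the updated
    weights are returned to the caller.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2.0 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2.0 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(client_weights, client_sizes):
    """Server step: average client weights, weighted by local sample count."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)


def run_fedavg(clients, rounds=100, fraction=1.0, seed=0):
    """Alternate local training and server-side aggregation for several rounds."""
    rng = random.Random(seed)
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        # Sample a fraction of clients each round (at least one).
        k = max(1, int(fraction * len(clients)))
        selected = rng.sample(range(len(clients)), k)
        updates = [local_update(global_weights, clients[i]) for i in selected]
        sizes = [len(clients[i]) for i in selected]
        global_weights = federated_average(updates, sizes)
    return global_weights


if __name__ == "__main__":
    w, b = run_fedavg(make_clients())
    print(f"learned w={w:.3f}, b={b:.3f}")
```

In this sketch the server only ever sees the pair `(w, b)` from each client, never the `(x, y)` samples, which is the property the paragraph above describes. Weighting by sample count means a client holding more data has proportionally more influence on the global model.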

Unless otherwise stated, all posts on this blog are licensed under CC BY-NC-SA 4.0. Please credit 流火七月 when reposting!
